Evaluating GPT-4-Turbo

Last Monday (November 6, 2023) OpenAI held their first developer day conference and unveiled several new features. To us, one of the most interesting announcements was the launch of the new GPT-4-Turbo LLM.

If you've used GPT-4 for any length of time you know that, at least through the commoner's API, its latency and cost can be prohibitive for many applications. Because of this, we reserve it only for the most critical parts of our pipeline, and default to GPT-3.5 (or a fine-tuned GPT-3.5) for those where we need speedy answers. But it does take a lot more work to get 3.5 to perform to the high quality level we require, and thus we were immediately excited about the prospect of having a model that's promised to be as good as GPT-4 at lower cost (3x cheaper price for input tokens and a 2x cheaper price for output) and latency, not to mention a 128k token context window that can simplify token-intensive workflows.

At ALU, we have invested into building evaluation tools that allow us to test any changes, including new models, easily and often. It wasn't before Friday when we got a reasonable usage quota for GPT-4-Turbo so we jumped right into evaluating the new LLM with Gaucho.

GPT-4-Turbo vs GPT-4

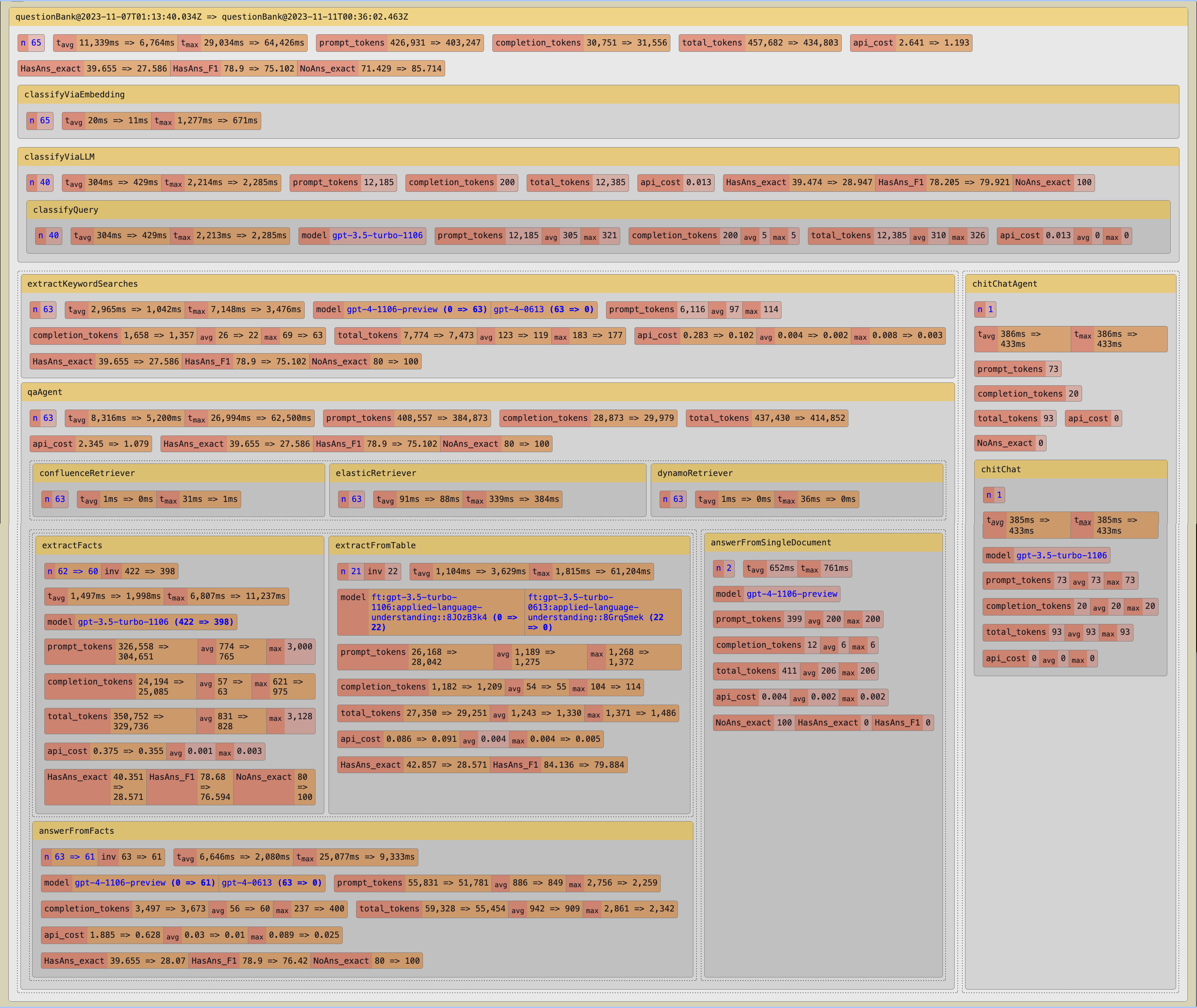

For our first evaluation run, we replaced gpt-4-1106-preview (GPT-4-Turbo) in the stages where we currently use gpt-4-0613 (GPT-4).

While the overall F1 score went down 3.8 points (~4.8%) from 78.9 to 75.1, the average time to answer went down 40% from more than 11 seconds to under 7, and cost for the whole evaluation went down almost 55% from around $2.6 to around $1.2.

There are important nuances. If you look carefully at the image below, you'll notice that the overall NoAns_exact score increased quite a bit. This score relates to the ability of the model not to answer questions that shouldn't be answered because, for example, there are no relevant facts present in the source document dataset. We need to further analyze if GPT-4-Turbo is indeed better at preventing hallucination or simply is more reluctant to answer questions. Initial detail observations indicate that some of the gains in this score may actually be real. We plan to add new metrics to Gaucho to better assess this.

We don't pay as much attention to HasAns_exact which indicates that the answer matches perfectly one of the gold answers. It decreased very significantly, and this could be attributed to a different level of verbosity or other reasons. Again, this is something that we're analyzing further.

Focusing on the stages of our pipeline where actually we use GPT-4 we can see that in extractKeywordSearches the average latency went down from 2,965ms to 1,042ms and the total cost went down from 0.283 to 0.102, both around 65%. And in our most critical stage, answerFromFacts, average latency went down almost 69% from 6,646ms to 2080ms and average cost went 66% down from $0,03 per query to $0,01 (note that there were 2 fewer requests made in this stage with GPT-4-Turbo because two queries were routed to the answerFromSingleDocument stage instead. Such are the joys of evaluating LLMs that small changes earlier on can lead to larger changes later in the pipeline.)

GPT-4-Turbo vs GPT-3.5-Turbo

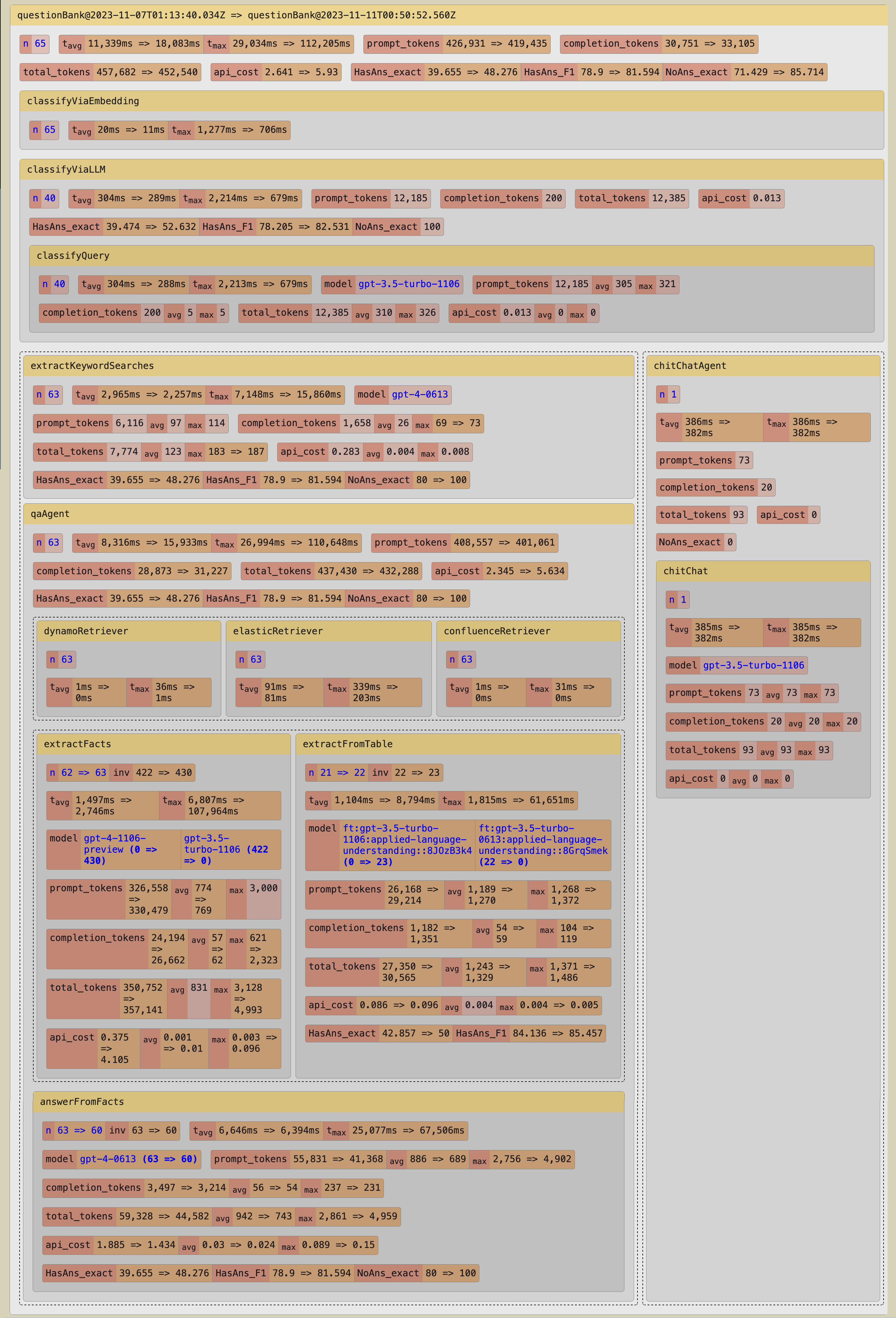

We also wanted to see how much better GPT-4-Turbo is against GPT-3.5-Turbo, which has been our workhorse for some of the more intensive parts of the pipeline, in particular the fact extraction step.

After replacing gpt-3.5-1106 with gpt-4-1106-preview in the extractFacts stage of the pipeline, we noticed a noticeable improvement in quality, with all quality metrics increasing substantially. While F1 went up 2.7 points (3.3%), NoAns_exact jumped up by 14.3 points (16.7%) which is nothing short of dramatic when combined with a large increase also in HasAns_exact of 8.6 points (17.9%). This indicates that the system is both hallucinating less (or being more assertive at saying that there is no answer) and recalling more precisely the facts from the sources.

Conversely, overall latency went up 45% in the extractFacts step (37.3% overall) and cost up 90.7% (55.5% overall).

Conclusion

Evaluations are a great way to separate the hype from the facts and get a concrete idea of how well a new model will perform in your system.

While all results are open to variability (especially when assessing a hosted model through an API where we don't control batching, concurrence and quota throttling), regularly performing end-to-end evaluations is the only way to make sure that the system is working within acceptable quality, latency and cost parameters, and offers a solid path for continuously improvement.

GPT-4-Turbo is not going to immediately replace all the other models in our pipeline, but it's a good addition to the palette of options we have available. We will continue to use a variety of LLMs, particularly fine-tuned models, and we will continue to evaluate new options as they become available.