Fine-tuning OpenAI's GPT-3.5

There are very few – probably none – of our large language model prompts that I am completely happy with. Historically, like pretty much everyone in the industry, we leaned on "prompt engineering". I think this is an unfortunate term: it often feels more like playing "prompt whack-a-mole" than actual engineering.

This is not to say that refining our prompts hasn't improved the output; it certainly has. Recently, however, with our new table processing system, we reached the limits of what we could do simply by tweaking our prompt.

For context, our table processing prompt relies on providing an overview of the table structure and a fairly large function definition for OpenAI's function calling. Allowing the LLM to write arbitrary SQL is certainly tempting, but we get far more control by providing a structured schema that we call "pseudo-SQL" (p-SQL). We then have our own engine that consumes this p-SQL, giving us fine-grained control to add and modify features and capabilities.
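To make the idea of a structured schema concrete, here is a minimal sketch of what a function definition for function calling could look like. The function name `run_pseudo_sql`, the operation names, and the `steps` layout are all assumptions made for illustration; our real definition is considerably larger and is not shown here.

```python
import json

# Hypothetical operation names, chosen only for illustration.
ALLOWED_OPS = ["SELECT", "FILTER", "SORT"]


def build_function_definition(column_names):
    """Build a JSON Schema style function definition restricted to known columns."""
    return {
        "name": "run_pseudo_sql",  # hypothetical name
        "description": "Answer a question about the table by emitting pseudo-SQL steps.",
        "parameters": {
            "type": "object",
            "properties": {
                "steps": {
                    "type": "array",
                    "items": {
                        "type": "object",
                        "properties": {
                            # The enum keeps the model inside the supported operations.
                            "op": {"type": "string", "enum": ALLOWED_OPS},
                            # Only columns that exist in the table are allowed.
                            "columns": {
                                "type": "array",
                                "items": {"type": "string", "enum": list(column_names)},
                            },
                        },
                        "required": ["op", "columns"],
                    },
                }
            },
            "required": ["steps"],
        },
    }


definition = build_function_definition(["price", "cost"])
print(json.dumps(definition, indent=2))
```

Constraining operations and column names with `enum` is one way such a schema can give more control than free-form SQL: anything outside the lists can be rejected before it reaches the engine.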

As we add functionality, we iterate through multiple variations of the prompt and function definition to find the path of least resistance for the LLM to comply. We look for a sort of "natural" tendency of the LLM to respond correctly to the prompt.

For example, we wanted to add an operation to p-SQL that would compute the absolute difference between two columns. We started by creating an operation that applies the abs() function to the values of a column. Our hope was that the LLM would first create a new column by subtracting two existing ones, and then apply abs() to the new column. Alas, the LLM stopped at computing the difference and seldom tried to compute the absolute value, no matter how much we hinted at it. (This is tricky because the prompt has to stay generic enough for all sorts of queries, so every hint risks breaking something else.)

Then we tried a different idea: a new "two-in-one" operation that subtracts two columns and computes the absolute value of the result, which we named ABS_DIFF. This was better, but the LLM still didn't use it consistently. After several iterations and evaluations, we found that the LLM was happiest with the name SUB_ABS, and with that name it needed almost no explanation to do the right thing when the query required it.

See what I meant by "prompt whack-a-mole"? This game keeps our accuracy and reliability high while allowing us to be more token-frugal.
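For readers who want to picture the engine side, here is a minimal sketch of how a two-in-one SUB_ABS step could be executed. The step layout (`op`, `columns`, `as`) and the helper names are assumptions for illustration, not our actual engine.

```python
def sub_abs(rows, left, right, out):
    """Return copies of the rows with a new column out = |left - right|."""
    return [{**row, out: abs(row[left] - row[right])} for row in rows]


def run_steps(rows, steps):
    """Apply each step in order; unknown operations are rejected outright."""
    handlers = {"SUB_ABS": sub_abs}  # hypothetical registry with a single operation
    for step in steps:
        handler = handlers.get(step["op"])
        if handler is None:
            raise ValueError(f"unsupported operation: {step['op']}")
        rows = handler(rows, *step["columns"], step["as"])
    return rows


table = [{"forecast": 10, "actual": 14}, {"forecast": 9, "actual": 4}]
steps = [{"op": "SUB_ABS", "columns": ["forecast", "actual"], "as": "error"}]
result = run_steps(table, steps)
print([row["error"] for row in result])  # [4, 5]
```

Because the engine only dispatches on names it knows, renaming an operation is cheap: the handler stays the same and only the registry key and the function definition change.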

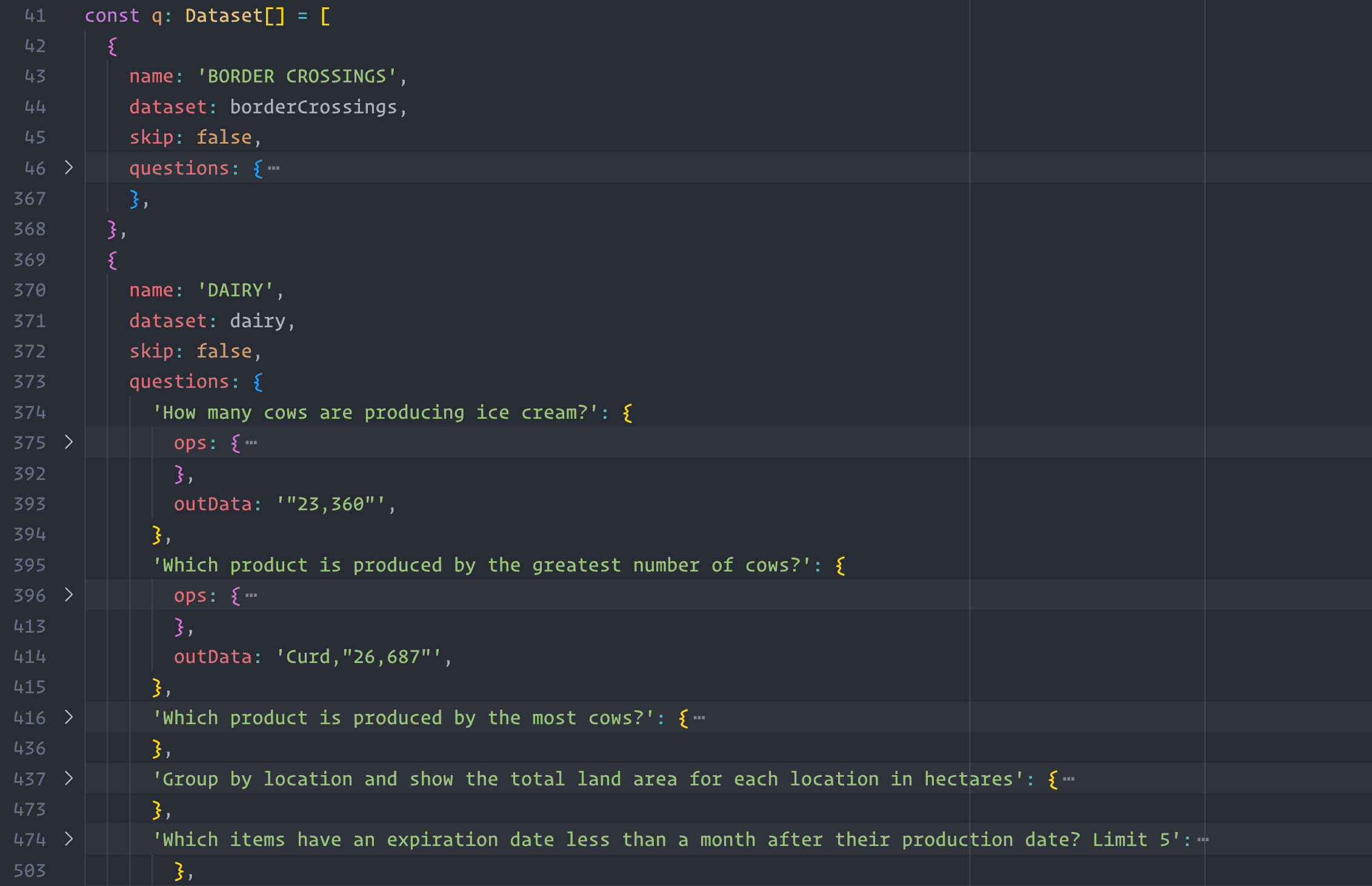

But there is a side to all this that is decidedly engineering too. Without a rigorous evaluation framework, changing prompts is very often taking one step forward and two steps back. So we have developed robust datasets to assess and validate all of our experiments. This, as it turns out, is very important for fine-tuning.

What tempted us to try fine-tuning was a desire for more control. We believe using more varied (and, in the future, potentially in-house) models will allow us to better balance quality and cost, along with other concerns such as privacy and confidentiality. We felt that fine-tuning GPT-3.5 for our p-SQL generator was a good first step in this direction.

I built a training dataset of a bit over 250 examples. While that is still relatively small (but growing), it's made up of high-quality, manually curated examples that cover a wide range of queries and data. Each example contains a query, a reference to an input data table, and both:

- The desired LLM output, which we can use to train / fine-tune.

- The expected final result, which we can use to make sure the p-SQL returned by the LLM executes correctly.
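The structure described above can be sketched as a small record type plus an evaluation loop. All field names, the `evaluate` helper, and the stand-in engine are assumptions for illustration; the real framework is more involved.

```python
from dataclasses import dataclass
from typing import Any


@dataclass
class Example:
    query: str
    table_ref: str
    desired_output: dict  # the p-SQL we want the model to emit
    expected_result: Any  # what executing that p-SQL should return


def evaluate(examples, generate, execute):
    """Return the fraction of examples whose executed output matches expected_result."""
    passed = 0
    for ex in examples:
        try:
            if execute(generate(ex), ex.table_ref) == ex.expected_result:
                passed += 1
        except Exception:
            pass  # output the engine cannot run counts as a failure
    return passed / len(examples) if examples else 0.0


# Stand-in table store and engine that support one hypothetical step shape.
TABLES = {"sales": [{"forecast": 10, "actual": 14}]}


def execute(psql, table_ref):
    return [abs(row[psql["left"]] - row[psql["right"]]) for row in TABLES[table_ref]]


examples = [
    Example(
        query="How far off was the forecast?",
        table_ref="sales",
        desired_output={"op": "SUB_ABS", "left": "forecast", "right": "actual"},
        expected_result=[4],
    )
]

# Feeding the desired output back through the engine checks the dataset itself.
accuracy = evaluate(examples, lambda ex: ex.desired_output, execute)
print(accuracy)  # 1.0
```

Passing a real model call as `generate` turns the same loop into a regression check for a prompt change or a fine-tuned model.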

Creating the training set was the hard part. The fine-tuning itself is actually incredibly easy through the OpenAI interface, and so far we're very happy with the results.
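As a rough sketch of the preparation step, the snippet below turns curated examples into JSONL records. The record layout (a `messages` list whose assistant turn carries a `function_call`, plus a top-level `functions` list) reflects my understanding of OpenAI's chat fine-tuning format with function calling and should be checked against the current documentation. The helper names, prompt text, and function definition are hypothetical.

```python
import io
import json


def to_training_record(system_prompt, query, function_def, arguments_json):
    """Build one training record, refusing arguments that are not valid JSON."""
    try:
        json.loads(arguments_json)
    except json.JSONDecodeError as err:
        raise ValueError(f"invalid JSON in example for query {query!r}: {err}") from err
    return {
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": query},
            {
                "role": "assistant",
                "function_call": {
                    "name": function_def["name"],
                    "arguments": arguments_json,  # kept as a string, as the model emits it
                },
            },
        ],
        "functions": [function_def],
    }


def write_jsonl(records, stream):
    """Write one JSON document per line."""
    for record in records:
        stream.write(json.dumps(record) + "\n")


# Hypothetical, heavily trimmed function definition.
function_def = {"name": "run_pseudo_sql", "parameters": {"type": "object", "properties": {}}}
record = to_training_record(
    "You translate questions into pseudo-SQL.",
    "How far off was the forecast?",
    function_def,
    '{"op": "SUB_ABS", "columns": ["forecast", "actual"]}',
)
buffer = io.StringIO()
write_jsonl([record], buffer)
print(buffer.getvalue())
```

Validating every example at write time means a malformed one fails loudly before it ever reaches a training file.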

What have we learned from fine-tuning GPT-3.5?

So far I have four main takeaways from this experience:

- It takes very few examples to have a meaningful impact on the performance of the model. We saw value immediately even with just a few dozen examples.

- Quality, quality, quality! Because it takes so few examples to make an impact, even a single bad example can really throw things off. This is doubly important with OpenAI's function calling and JSON output. If you provide any invalid JSON as an example, you destroy the model's reliability in providing parseable JSON.

- Huge latency improvements. I really do mean huge here. On the low end we saw latency improvements of 3-4x, but on the high end we are seeing improvements on the order of 9-10x faster inference compared to the base GPT-3.5-turbo. It's possible that some customers could justify the 9x price increase through latency improvements alone.

- Fine-tuning complex JSON object responses to a function call can hurt the model's ability to output valid JSON. Say you want the model to call your function with an object with three properties, but some properties are optional and the order of the properties doesn't matter. What we see happen is that the model will prematurely close the JSON object between properties. Our hypothesis is that during fine-tuning it sometimes sees different properties ending the JSON, which creates a possibility for it to mess up when the property isn't the final one in the output. We have found this to be mostly fixable "in-post" with some ugly regular expressions, but it's an important gotcha to keep an eye out for.
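To illustrate the kind of "in-post" fix mentioned in the last takeaway, here is a minimal sketch. It assumes the failure looks like a stray closing brace followed by a comma and another key (for example `...]}, "as": "error"}`); that shape, the regular expression, and the function name are assumptions, not our production code.

```python
import json
import re

# A closing brace followed by a comma and another quoted key, e.g. `}, "as":`
PREMATURE_CLOSE = re.compile(r'\}(\s*,\s*"[^"\\]*"\s*:)')


def repair_premature_close(text):
    """Parse model output, dropping one stray closing brace if that makes it valid."""
    try:
        return json.loads(text)  # already valid: leave it untouched
    except json.JSONDecodeError:
        pass
    # Try removing each suspicious brace in turn; keep the first candidate that parses.
    for match in PREMATURE_CLOSE.finditer(text):
        candidate = text[: match.start()] + match.group(1) + text[match.end():]
        try:
            return json.loads(candidate)
        except json.JSONDecodeError:
            continue
    raise ValueError("could not repair model output")


broken = '{"op": "SUB_ABS", "columns": ["forecast", "actual"]}, "as": "error"}'
print(repair_premature_close(broken))
```

Only attempting a repair after a normal parse fails, and re-parsing every candidate, keeps legitimate nested objects from being altered.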