Introducing X-ray

I am excited to announce the launch of a unique new feature for Connie AI that we are calling "X-ray". Starting today, Connie not only offers correct and concise answers to any question that can be answered with the content of your Confluence site, but also lets you see exactly how it arrived at them.

In a recent post, "Evaluating Atlassian Intelligence", we saw that quality cannot be taken for granted. We concluded that for AI to earn trust, it needs not only correctness but also transparency: the ability to verify the answers the AI provides.

Trusting AI

Since the very beginning, our North Star has been to make sure Connie is accurate and reliable. We have worked very hard to ground Connie's answers in the data in your Confluence spaces, and only that data, and to minimize "hallucinations". We are proud of the progress we've made, but we believe accuracy alone is not, and may never be, enough for mission-critical trust.

Throughout your education, you've likely encountered homework or exams where giving the correct answer wasn't sufficient. These questions required that you "show your work". In college, I was very fortunate to take a course on computer science theory taught by Alfred Aho. Its exams worked differently from those in all my other classes. Each exam generally had only a handful of questions, and each question had a yes/no (or, in this case, true/false) answer. Critically, providing only the correct set of answers was not enough to do well: every question required you to justify your answer. For example:

Are the recursive languages closed under the Kleene star operator? Briefly justify your answer.

This brings us to today's launch of X-ray for Connie AI.

Radical transparency

We have written on this blog before about some of the inner workings of Connie. The key insight that made X-ray possible was that all of the intermediate information we process and create when answering a question in Confluence is also exactly the information a user needs to fact-check Connie.

Today, confirming that the answer you got from an AI is correct typically requires opening and checking the sources provided or, even worse, manually performing the search that the AI was trying to help you avoid. What a waste! If people are going to use, much less pay for, AI question answering, it needs to improve their productivity, not hamper it.

There is a better way.

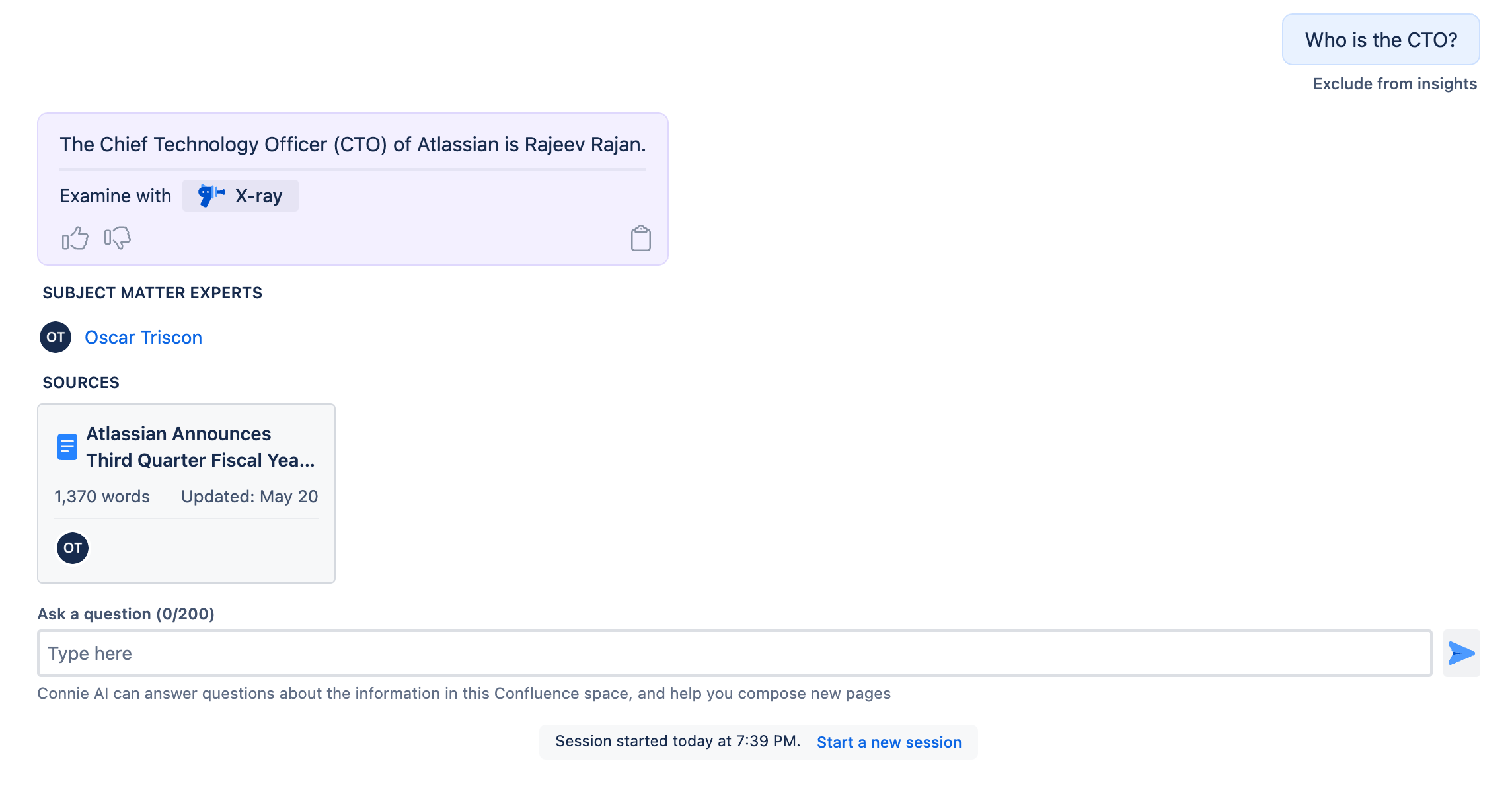

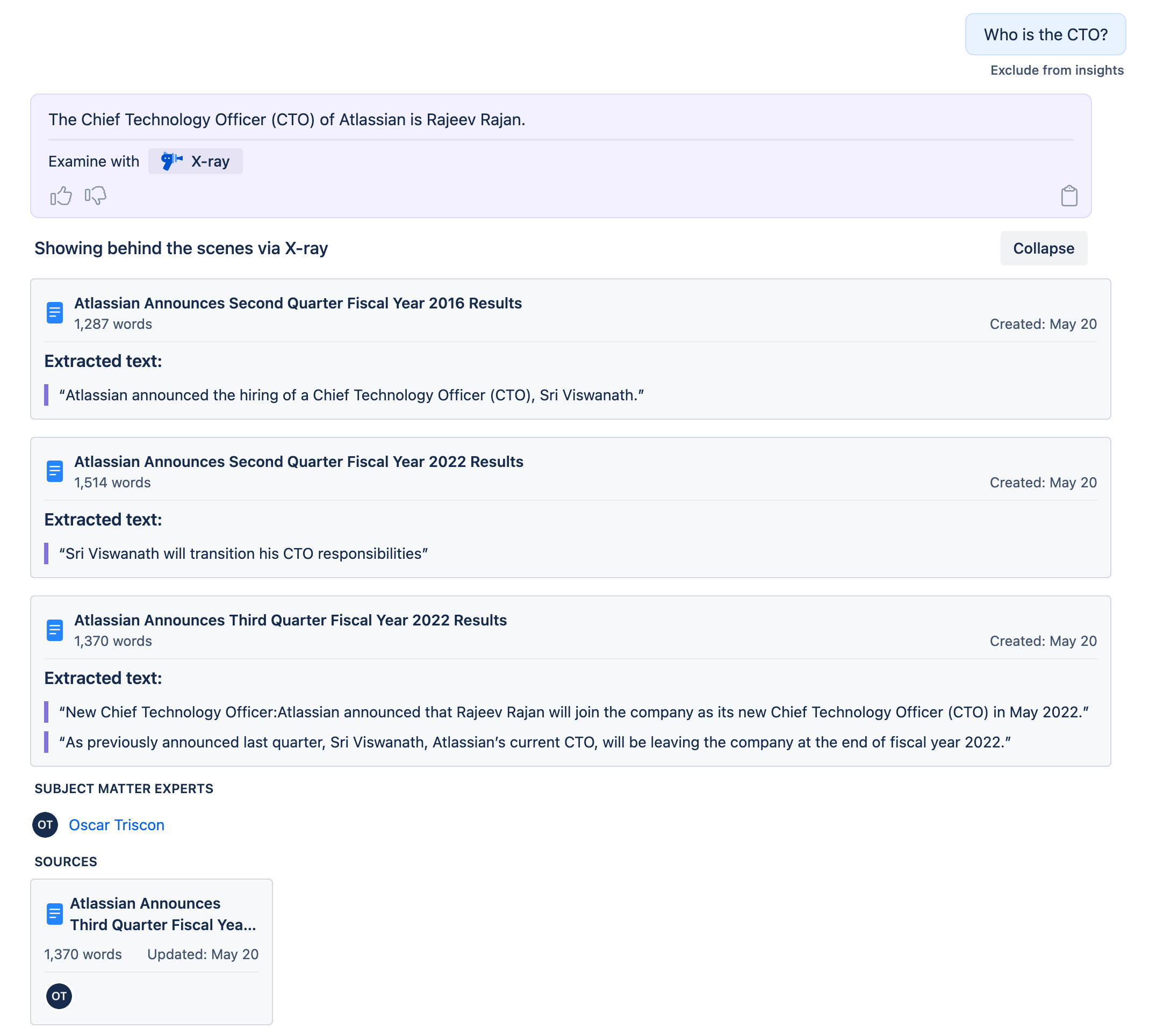

Here is what happens when you click it...

To be honest, this was scary to me. We are showing you some of the inner workings of Connie AI, flaws and all. We do hope that you'll let us know about the flaws as well as the wins.

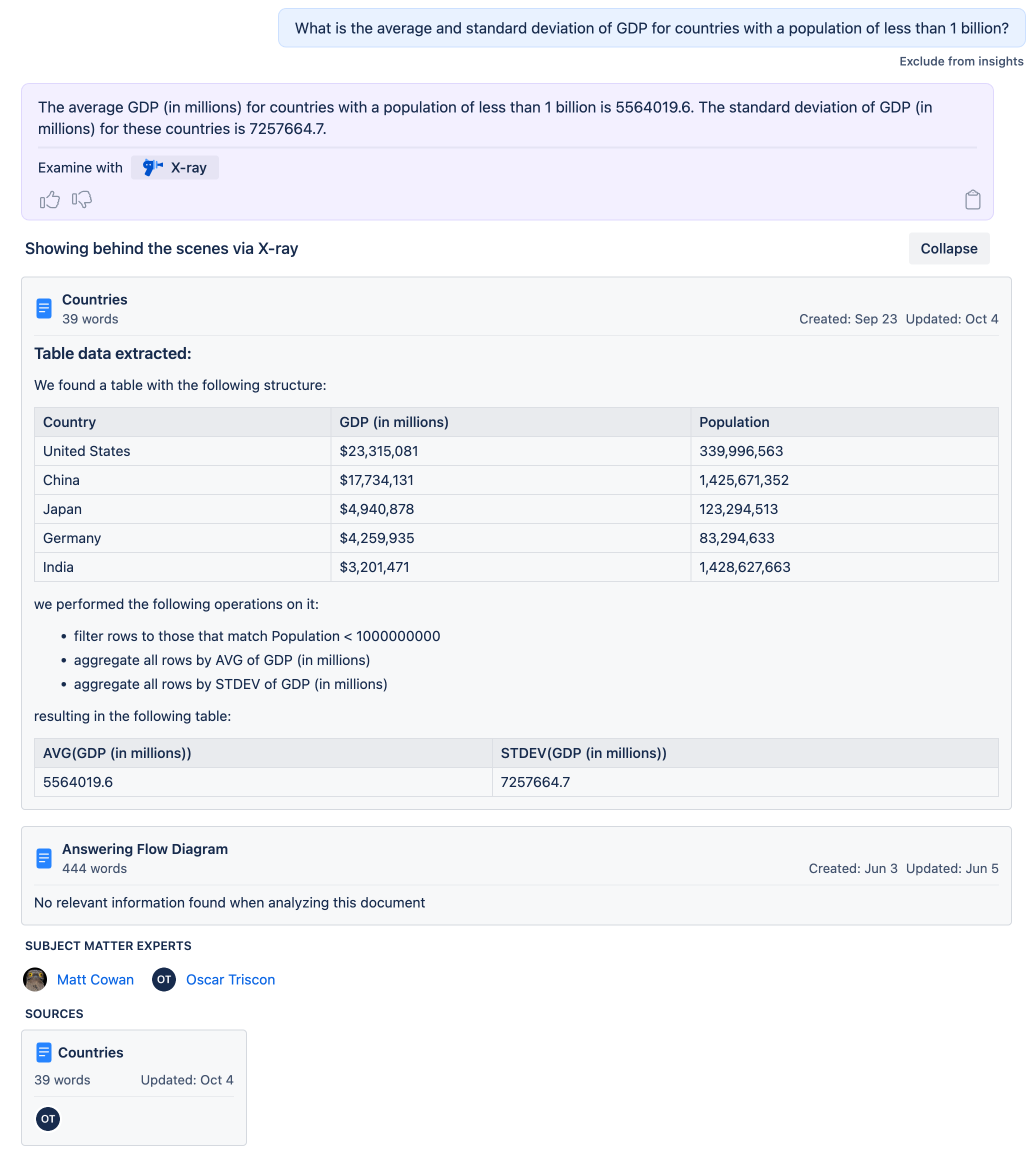

In an earlier post, we discussed our work on understanding tables and structured data. X-ray gives Connie the space to really show what it's capable of.

With X-ray, you see which documents Connie analyzed when answering your question, as well as what information was extracted from them to produce the answer. Any extracted text or tables you see in X-ray are guaranteed to be verbatim from the document: no AI hallucinations allowed. We are optimistic that pushing for further transparency and "showing your work" with AI will help us build a better product and help you trust Connie AI.
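This post does not describe how the verbatim guarantee is enforced. Purely as an illustration, one simple way to provide such a guarantee is to discard any extracted snippet that is not an exact substring of the document it claims to come from. The `Extraction` record and the `is_verbatim` and `filter_verbatim` functions below are hypothetical names for this sketch, not Connie's actual implementation.

```python
from dataclasses import dataclass


@dataclass
class Extraction:
    """Hypothetical record pairing a snippet shown to the user with its source page."""
    source_id: str
    snippet: str


def is_verbatim(extraction: Extraction, documents: dict[str, str]) -> bool:
    """Return True only if the snippet appears character-for-character in its source."""
    source_text = documents.get(extraction.source_id)
    if source_text is None:
        # Unknown source: we cannot verify the snippet, so reject it.
        return False
    return extraction.snippet in source_text


def filter_verbatim(extractions: list[Extraction], documents: dict[str, str]) -> list[Extraction]:
    """Keep only extractions that can be matched exactly against their source document."""
    return [e for e in extractions if is_verbatim(e, documents)]


# Usage with made-up example data: the paraphrased figure in `bad` is rejected.
docs = {"page-1": "The Q3 budget is $40k. Approved by finance."}
good = Extraction("page-1", "The Q3 budget is $40k.")
bad = Extraction("page-1", "The Q3 budget is $45k.")
kept = filter_verbatim([good, bad], docs)
```

An exact substring test is deliberately strict: any paraphrase, however small, fails the check, which is what a "verbatim only" promise requires.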

As always, you can reach us at contact@alu.ai if you have questions or would like to discuss further.